For policy makers: Overall, the process of ordering a cheeseburger is a complex interplay of linguistic semantics, social norms, and cultural expectations aimed at facilitating a successful transaction between the customer and restaurant staff. The art of ordering a cheeseburger is akin to a complex dance between linguistic acrobatics, social pitfalls, and the pursuit of the perfect serving of happiness nestled between two bun halves. One must decipher the language of menus and master the hidden codes of “please” and “thank you,” all while remaining as cool as a cucumber to earn the respect of the restaurant staff. It’s a journey fraught with challenges, but when one succeeds in ordering a cheeseburger, it’s not just a meal; it’s an epic triumph over culinary tides!

👨🍳 (Semantic) ingredients

What prompt keys such as F.R.A.M.E., S.S.S.C.L.A., or F.O.C.A.L. do in Midjourney (closed source, see below) to steer the output toward the target, COSTAR does in GPT-4. Context: the background information. Objective: the target for the LLM to focus on. Style: the writing style. Tone: the attitude of the response. Audience: the intended receiver. Response: the format of the response. (For what lies beyond semantics I recommend this article.)
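To make the six COSTAR fields tangible, here is a minimal sketch of how such a prompt could be assembled. The class name `CostarPrompt`, the `# LABEL #` layout, and the cheeseburger example texts are my own assumptions for illustration; COSTAR itself only prescribes the six fields, not a particular format.

```python
from dataclasses import dataclass


@dataclass
class CostarPrompt:
    """Hypothetical helper holding the six COSTAR fields named above."""
    context: str
    objective: str
    style: str
    tone: str
    audience: str
    response: str

    def render(self) -> str:
        """Join the six fields into one labelled prompt string."""
        sections = [
            ("CONTEXT", self.context),
            ("OBJECTIVE", self.objective),
            ("STYLE", self.style),
            ("TONE", self.tone),
            ("AUDIENCE", self.audience),
            ("RESPONSE", self.response),
        ]
        # The "# LABEL #" delimiter is an assumed convention, not a requirement.
        return "\n\n".join(f"# {label} #\n{text.strip()}" for label, text in sections)


prompt = CostarPrompt(
    context="A customer is standing at the counter of a burger restaurant.",
    objective="Order one cheeseburger politely and without misunderstandings.",
    style="Short spoken sentences.",
    tone="Friendly and relaxed.",
    audience="The restaurant staff taking the order.",
    response="One or two sentences, ending with a thank you.",
).render()

print(prompt)
```

The rendered string can be pasted into any chat model as a single message; the order of the sections follows the acronym.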

In order not to be seen as biased, I would also like to present some open-source licensed alternatives here: Render Net, Realistic Vision, Absolute Reality, RealVisXL, Epic Realism, NextPhoto, CyberRealistic, NightVisionXL.

By the way, concerning my recent post on Midjourney’s new consistent characters, there is a nice follow-up on Multi Character Consistency and Character Blending by @HalimAlrasihi.

A1) 100% free range or bio barn beef – FOUNDATION Models

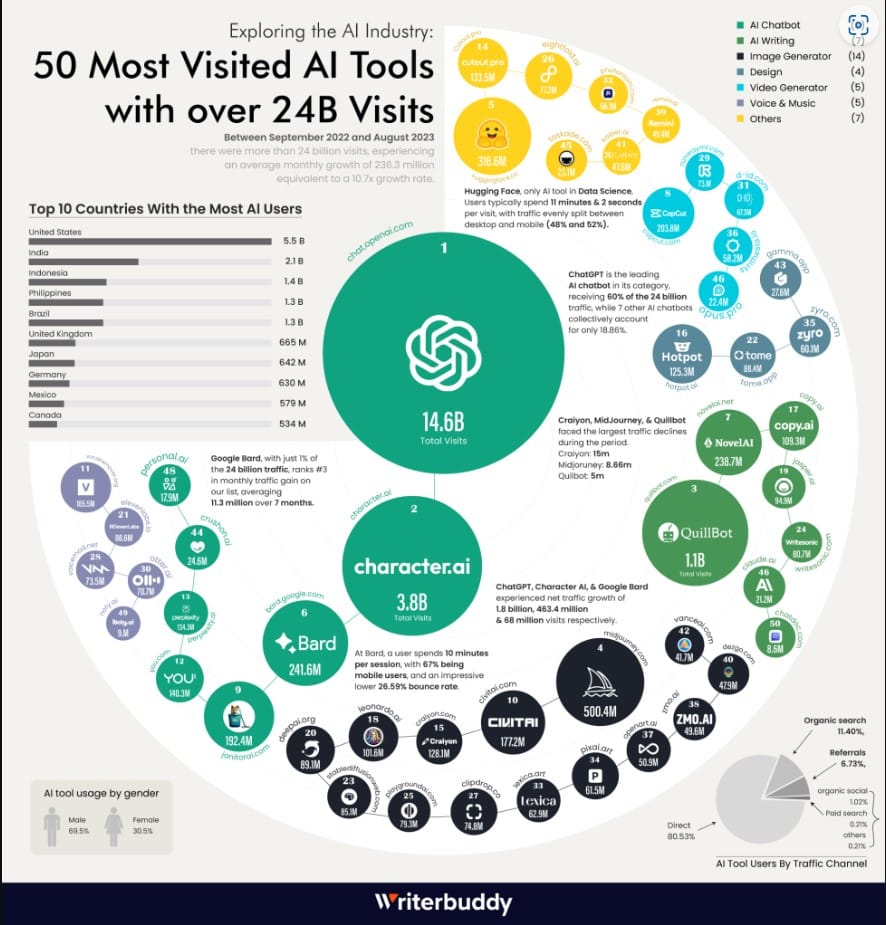

(see my blog, kopie-von-midjourneyii, Textgeneratoren); [Of course, everything country-specific here refers to the US market dominance of this technology (Open AI is dominant in China).]

| The business of writing foundation models (LLMs), branded as | |
| --- | --- |
| Open AI | Closed AI |
| OpenAI (ChatGPT, Sam Altman), Microsoft (+LinkedIn), Musk (Twitter/X, xAI, SpaceX) | Gemini (ex-Bard = Google), Meta AI (Zuckerberg), Claude |
| ChatGPT: OpenAI was co-founded in 2015 by Musk, who left the board in 2018. Originally nonprofit, meanwhile for profit = not so OpenAI. The largest model is GPT-4; OpenAI has not published its parameter count, and unofficial estimates put it at around 1.7 trillion rather than the 170 trillion sometimes quoted. GPT-4 powers Microsoft Bing’s AI. Musk’s Grok is xAI’s own model, benchmarked against GPT-3.5 rather than built on it; the Grok-1 LLM was first reported at 63 billion parameters. | |

Grok in and of itself is not a (foundation) LLM, so what qualifies Grok-1 as an LLM? Grok is a generative model (see below) and an LLM application, an app so to speak. The era of LLMs is said to be over, but that’s not stopping OpenAI from developing GPT-5 (potential for AGI status, see God News below).

Musk vs. Altman is an LLM application dispute and at the same time a mutiny on the horizontal and vertical AI “Bounty” (see B) a) and b) below). Well, for grokking in Austria you need this for the time being in order to have a reference point for the dispute.

🌋God News: AGI (self-controlled AI), the Q-Project

🚀 19/03/2024: xAI open-sources the Grok-1 base model (downloadable on GitHub without licensing fees; users can build on it now). With this move, every country in the world can and must have an opinion about the dispute.

- Grok-1, a 314-billion-parameter model, is open-sourced (compare the 63 billion in the left column above; it happens rapidly)

- Licensed under Apache License 2.0 for commercial use

- No training code is included with the release.

- Grok-1 was trained on a custom stack.

- Potential use for conversational search by Perplexity AI (touted as the new Google search, the Swiss army knife. But don’t panic, Google is here to stay).

- Elon Musk criticizes OpenAI and aims to uphold non-profit AI ideals.

💸 Note: Musk’s new license implementation is also eagerly awaited.

A2) BEEF FOR DUMMIES – FOUNDATION MODELS FOR DUMMIES

Anyone who keeps or has kept a household budget book can now understand what foundation models are. All you need is a table. So please sit down, relax and look at the table/spreadsheet (watch the lessons for the aha moment). And the Oscar for ELI5 goes to

B) Spices, pickles, tomatoes, side dishes and sauces – THE SERVICES SOLD ON THE FOUNDATION MODELS a) vertical and b) horizontal services

Vocabulary:

- SaaS – Software as a Service

- Horizontal SaaS is a type of cloud software solution aimed at a wide audience of business users, regardless of their industry.

- Vertical SaaS solutions include software aimed at a specific niche or industry-specific standards. This is a recent trend in the evolution of the SaaS market. Because vertical software is designed specifically for clear industry niches, it limits the size of the potential market.

- On-top SaaS: Targeted and/or industry-neutral software designed to integrate with an existing solution or multiple solutions (e.g. Zapier). To this end, brands have started building proprietary software ecosystems known as PaaS (Platform as a Service). By using PaaS platforms, SaaS companies can build new products that help them enter specific industries while working alongside a consolidated brand.

- Spices and sauces/Generative models: Generative AI, short for Generative Artificial Intelligence, is capable of generating content that is similar to the data it was trained on, from text to images to music. The potential is impressive, but generative AI also presents challenges and ethical concerns, particularly around the authenticity and potential misuse of the generated content. Generative AI models use a specific neural network to generate new content. Depending on the application, these include:
  - a) Generative Adversarial Networks (GANs): GANs consist of a generator and a discriminator and are often used to generate realistic images.
  - b) Recurrent Neural Networks (RNNs): RNNs are specifically designed to process sequential data such as text and are used to generate text or music.
  - c) Transformer-based models: Models like OpenAI’s GPT (Generative Pretrained Transformer) are transformer-based models used for text generation (for hot news on transformers see).
  - d) Flow-based models: Used in advanced applications to generate images or other data.
  - e) Variational Autoencoders (VAEs): VAEs are often used in image and text generation.
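The core idea, "generate content that is similar to the training data", can be shown without any neural network at all. The following is a deliberately tiny sketch using a word-level bigram (Markov chain) model, which is not one of the architectures listed above but follows the same learn-then-sample principle. The function names and the three-sentence corpus are made up for illustration.

```python
import random
from collections import defaultdict


def train_bigram_model(text: str) -> dict[str, list[str]]:
    """Map each word to the list of words that followed it in the training text."""
    words = text.split()
    followers = defaultdict(list)
    for current, nxt in zip(words, words[1:]):
        followers[current].append(nxt)
    return dict(followers)


def generate(model: dict[str, list[str]], start: str, max_words: int, seed: int = 0) -> str:
    """Sample a word sequence by repeatedly picking a follower of the last word."""
    rng = random.Random(seed)  # fixed seed so the sketch is repeatable
    output = [start]
    while len(output) < max_words:
        candidates = model.get(output[-1])
        if not candidates:  # dead end: this word was never followed by anything
            break
        output.append(rng.choice(candidates))
    return " ".join(output)


# Toy training data (assumption): three restaurant orders.
corpus = "one cheeseburger please . one cheeseburger with pickles please . one salad please ."
model = train_bigram_model(corpus)
sample = generate(model, start="one", max_words=8)
print(sample)
```

Every word pair in the output occurred in the corpus, yet the sentence as a whole may be new. Real generative models replace the lookup table with a trained network, but the loop of "predict the next piece, append, repeat" is the same for text generators such as GPT.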

a) Vertical – raw models (link)

For example: A company finds candidate models for its vertical industry or problem type or both from a marketplace and runs some A/B tests. Once the model is selected, it would be pointed at a finite corpus of data. For instance, a language model utilized for summarizing transactions, texts, emails and contracts for compliance violations could target data that is wholly contained within the boundaries of the enterprise and in some cases might be supplemented with selected sets from data marketplaces. That data would likely be stored as vector embeddings (numerical representations of data objects) and made accessible through specialized vector indexes that reside in specialized vector databases, or in the vector data stores of the operational databases that the company is already using. In turn, language patterns and terminology would be trained on much more finite vocabularies and ontologies.
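What "stored as vector embeddings and made accessible through vector indexes" means can be sketched in a few lines. The three-dimensional vectors below are hand-made placeholders (real embeddings come from an embedding model and have hundreds of dimensions), and the dictionary stands in for a vector database; both are assumptions for illustration only.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hypothetical embeddings; the axes loosely mean (contracts, payments, small talk).
index = {
    "Supplier contract, clause 7: penalties for late delivery": [0.9, 0.2, 0.0],
    "Wire transfer of 9,900 EUR split into three payments": [0.1, 0.9, 0.0],
    "Email: lunch on Friday?": [0.0, 0.1, 0.9],
}


def search(query_vector: list[float], index: dict[str, list[float]], top_k: int = 2) -> list[str]:
    """Return the top_k documents whose vectors are most similar to the query."""
    scored = [(cosine_similarity(query_vector, vec), doc) for doc, vec in index.items()]
    scored.sort(reverse=True)  # highest similarity first
    return [doc for _, doc in scored[:top_k]]


# Assumed embedding of a question such as "Any suspicious payment patterns?"
query = [0.2, 0.8, 0.1]
results = search(query, index)
print(results)
```

A production vector index does the same comparison, only with approximate nearest-neighbour structures instead of a full scan, so that millions of documents can be searched quickly.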

Mainstream enterprises are not OpenAI or the like. They are not in the business of writing foundation models, and they’re not burning venture capital cash to train them. They shouldn’t need access to tens of thousands of graphics processing units. But to prevent hallucinations and stay focused on their own problems and their ontologies and terminologies, it will be smarter not to train the models they implement on the whole of the internet. Instead, they will likely restrict training data to their own sets and, optionally, relevant third-party real or synthetic sets from data marketplaces, and keep them updated and relevant with retrieval-augmented generation, or RAG. When it comes to language models trained specifically for the business, the meek (smaller domain-specific models) are more likely to inherit the earth. We’re talking about models with millions rather than billions of parameters.

Companies need to identify the use cases and figure out how to address governance, bias and intellectual property issues. We won’t go into governance in detail here, but the problem is far more opaque and the stakes (particularly with deep fakes, copyrights and security) are broader and deeper than what we’ve talked about in “classic” machine learning models. In the meantime, the industry needs to put the ecosystem and safeguards together.

But that’s not going to happen overnight. It will likely be at least a couple of years before there is a critical mass of domain-specific models (although in terms of traffic they are largely already in use) and of best practices for sizing them, and only then will we (or they) see significant impact. But it will happen.
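The RAG idea mentioned above, look up the relevant company documents first and only then ask the model, can be sketched as follows. Retrieval here is a plain word-overlap count, which is a simplified stand-in for the embedding search a real system would use; the documents, the function names and the prompt wording are invented for illustration, and no actual model is called.

```python
import re


def tokenize(text: str) -> set[str]:
    """Lowercase the text and return its set of words and numbers."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, documents: list[str], top_k: int = 1) -> list[str]:
    """Rank documents by how many tokens they share with the question."""
    question_tokens = tokenize(question)
    ranked = sorted(
        documents,
        key=lambda doc: len(question_tokens & tokenize(doc)),
        reverse=True,
    )
    return ranked[:top_k]


def build_prompt(question: str, passages: list[str]) -> str:
    """Put the retrieved passages in front of the question for the model."""
    context = "\n".join(f"- {passage}" for passage in passages)
    return (
        "Answer using only the context below. If the answer is not there, say so.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}"
    )


# Hypothetical in-house documents.
documents = [
    "Travel policy 2024: flights above 500 EUR need approval from the department head.",
    "Canteen menu: cheeseburger on Tuesdays, vegetarian option every day.",
    "IT policy: passwords must be changed every 90 days.",
]

question = "Who must give approval for flights above 500 EUR?"
passages = retrieve(question, documents)
rag_prompt = build_prompt(question, passages)
print(rag_prompt)  # this string would be sent to whichever language model is in use
```

Because the answer is taken from the retrieved passage rather than from the model's memory, updating the documents updates the answers without retraining anything.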

b) Horizontal services

Horizontal services are delivered as prepackaged software-as-a-service applications that are priced at the costs that enterprises will bear. Like machine learning before it, for most enterprises gen AI will surface as applications, not raw models.

The most impactful development will be toward AI models that hide in plain sight. They will be embedded in horizontal services such as document aggregation, BI and visualization tools, as well as in enterprise applications that we already know from household names such as Adobe Inc., Salesforce Inc., Zoom Video Communications Inc., etc. Microsoft Copilot for Office 365 is arguably the most prominent flagship, while SAP Joule embodies the types of task-specific copilots (this one for workforce optimization) that we’ll see in enterprise applications. This parallels the way background machine learning has powered predictive analytics in enterprise applications such as Oracle Fusion Analytics over the years.

The common denominator is that companies do not need to go into depth to train models as these are delivered as pre-built software-as-a-service applications.

From late autumn 2023 until the New Year, the market for desktop users was flooded with specific applications. Right now, it’s the turn of the mobile market. It’s unbelievable how creative you have been able to be with your cell phone since the beginning of the year.

Gen AI is being seen as the top emerging technology over the next three to five years. And with it comes steep expectations for economic impact. As you can probably guess by the sound of it, generative AI is the branch of artificial intelligence focused on creating new content in the form of humanlike text, images, video, answers to questions, voice, even lines of code.

What concerns are there about Generative AI? There are a number of concerns associated with the use of Generative AI. In addition to the quality of the content generated, these also relate to the possibility of misuse.

- Abuse and disinformation: The ability of generative AI to produce realistic content can be abused, e.g. for deepfakes, fake news, fictitious documents and other forms of misinformation.

- Copyright and intellectual property: Generated content raises copyright and intellectual property issues because it is often unclear who owns the rights to the generated content and how it may be used.

- Bias and discrimination: If a Generative Artificial Intelligence has been trained on biased data, those biases may be reflected in the content generated.

- Ethics: Creating false content and manipulated information can raise ethical questions.

- Legal and regulatory issues: The rapid development of generative AI has led to an unclear legal situation; there is uncertainty about how the technology should be regulated.

- Data protection and privacy: The use of Generative AI to generate personal data or identify people in images is questionable in terms of data protection and privacy.

- Security: Generative AI can be used for social engineering attacks that are more effective than human attacks.

🏞🌄🌅 Gabriele2500 Panorama: For one thing, unlike overseas, we don’t have a total solar eclipse in this part of the world in April 2024; our next partial one is in March 2025 and the next total one is scheduled for 2081.

Topically relevant trend news: Call me Datty

Regarding voting preferences, I have to say it’s not my choice, but we have an election this year too. I thought about the US and decided to ask myself who wears his jeans best, knowing that as long as Americans seek comfort, style and self-expression, the timeless love story between America and jeans will last for generations. And within this narrative of fashion and freedom, for me there is an undisputed but controversial champion who seamlessly combines sport, comfort, and style. As far as Austria is concerned, the answer is not so simple. Every candidate looks pretty decent in their jeans. But what would their style look like if they had to wear their own style as fashion? Due to the candidates’ lack of interest in taking on my fashion challenge, this narrows down my choice of candidates to a maximum of 2. In fact, I have already made my choice irrefutably.

Personally, I’m currently facing a few challenges, as always, including, among other things, determining the figure’s name. The character’s personality is based on Queen Mousette from the Blues Brothers, except that she makes the universe sing. Not that that isn’t the case anyway: the earth is supposed to be the G tone, we just can’t hear it.

Until then, I’ll console myself with a little cake. Don’t forget that at Easter the bells fly to Rome and Happy Holidays. 🐇 🐤 🐣 🧺 🌷 🦋 ❤️
